In environments such as finance, mobility, and integrity enforcement, machine learning (ML) systems must be more than accurate: they must be explainable and justifiable. Stakeholders, from regulators to internal policy teams and end users, need to understand not only what a model predicts but why it arrives at that conclusion.

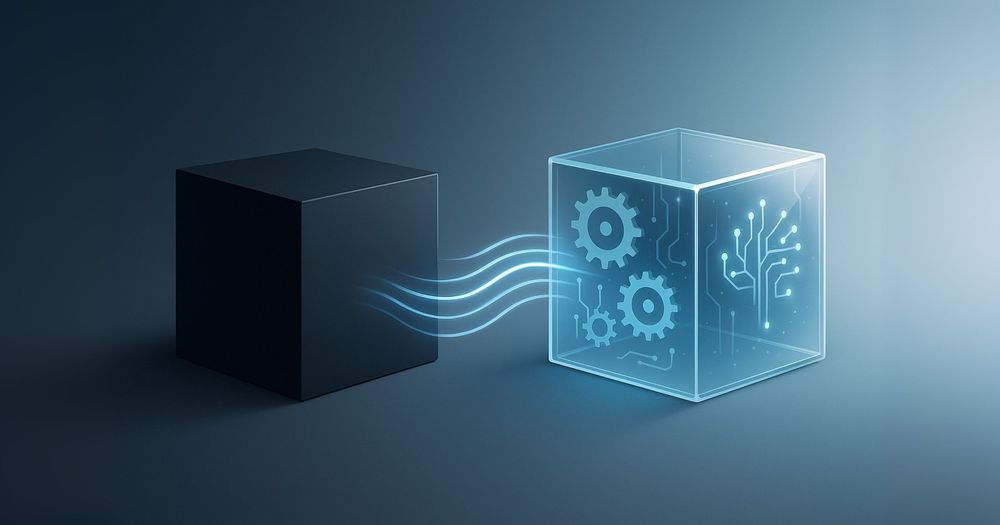

I have worked across highly regulated domains where safety and trust are paramount, from autonomous driving systems to financial and integrity-focused platforms. That experience showed me firsthand that black-box models are not enough.

In this article, we’ll explore why explainability can matter more than raw accuracy, how to choose the right interpretability tools, and what it takes to design systems where compliance, policy, and UX are part of the foundation rather than an afterthought.

Accuracy Isn’t Always Enough: Why Explainability Matters

In critical domains, ML decisions directly affect lives and reputations. Errors in credit scoring, integrity models, or vehicle perception can have severe consequences.

Common examples:

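- In finance, GDPR Article 22, which restricts decisions based solely on automated processing that produce legal or similarly significant effects, is widely cited as the basis of a right to explanation. In practice, models used for risk profiling or fraud detection must therefore be transparent and auditable.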

- In automotive, explainability is fundamental to accountability: developers and functional safety engineers must be able to trace and interpret system behavior, especially during incident investigations.

- In integrity systems, decisions to flag or restrict a user, business, or other entity based on ML predictions must be defensible to internal reviewers, in legal inquiries, and even to the public. This is not only a matter of fairness; public trust and regulatory compliance are often at stake.

In all three domains, explainability builds trust with regulators, with users, and within the organization.

Domain-Specific Explainability Requirements

Financial Systems

Financial ML systems serve multiple stakeholders with distinct needs:

- Regulators require transparency for fairness and anti-discrimination compliance

- Customers deserve clear explanations when applications are rejected

- Internal teams need audit trails linking decisions to specific model versions and feature values

Key Implementation Strategies:

Reason Codes: Standardized, user-readable explanations for decisions like loan denials or transaction flags.
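To make this concrete, here is a minimal sketch of how reason codes might be derived from signed per-feature contributions (for example, LIME or SHAP attributions) for a declined application. The feature names, the `REASON_CODES` table, and the code strings are hypothetical placeholders, not a regulatory standard.

```python
# Hypothetical mapping from model features to standardized, customer-readable reasons.
REASON_CODES = {
    "loan_amount": ("R01", "Requested loan amount is high relative to your profile"),
    "employment_months": ("R02", "Length of employment is too short"),
    "credit_score": ("R03", "Credit score is below the approval range"),
}


def top_reason_codes(contributions, max_reasons=2):
    """Return (code, text) pairs for the features that pushed the decision toward denial.

    `contributions` maps feature name -> signed contribution to the approval score;
    negative values count against approval. Features without a mapped reason are skipped.
    """
    adverse = [
        (name, value)
        for name, value in contributions.items()
        if value < 0 and name in REASON_CODES
    ]
    adverse.sort(key=lambda item: item[1])  # most negative (most adverse) first
    return [REASON_CODES[name] for name, _ in adverse[:max_reasons]]


# Contributions matching the loan example discussed in the LIME section.
contributions = {"loan_amount": -0.34, "employment_months": -0.22, "credit_score": 0.17}
for code, text in top_reason_codes(contributions):
    print(code, text)
```

Keeping the mapping in a reviewed table, rather than generating text on the fly, lets legal and compliance teams approve the exact wording customers see.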

Counterfactual Examples: "What would need to change for approval?" scenarios that guide customers and support appeals processes.
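A deliberately simple counterfactual search is sketched below: it varies one actionable feature over a grid of candidate values and returns the value closest to the applicant's current one that flips the decision. The logistic `approval_probability` model, its weights, and the 0.5 threshold are hypothetical stand-ins; dedicated counterfactual methods typically optimize over several features at once and add plausibility constraints.

```python
import math

# Hypothetical hand-set logistic model standing in for a real credit model.
WEIGHTS = {"loan_amount_k": -0.03, "employment_months": 0.04, "credit_score": 0.01}
BIAS = -5.0
THRESHOLD = 0.5  # approve when probability >= THRESHOLD


def approval_probability(applicant):
    z = BIAS + sum(w * applicant[name] for name, w in WEIGHTS.items())
    return 1.0 / (1.0 + math.exp(-z))


def single_feature_counterfactual(applicant, feature, candidates):
    """Return the candidate value nearest the current one that yields approval, or None."""
    current = applicant[feature]
    for value in sorted(candidates, key=lambda v: abs(v - current)):
        changed = {**applicant, feature: value}
        if approval_probability(changed) >= THRESHOLD:
            return value
    return None


applicant = {"loan_amount_k": 200, "employment_months": 6, "credit_score": 700}
print(round(approval_probability(applicant), 3))
print(single_feature_counterfactual(applicant, "loan_amount_k", range(0, 201, 5)))
```

For this toy applicant the search returns 70, i.e. a request of about $70k would be approved, which is the kind of statement a support agent can relay directly.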

Version-Controlled Audit Trails: Every decision logged with model version, feature values, thresholds, and timestamps for regulatory compliance.
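One way to structure such a trail, assuming a JSON log sink, is an immutable record per decision. The `DecisionRecord` fields and the example values are hypothetical; the SHA-256 digest is an optional tamper-evidence aid, not a compliance requirement in itself.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class DecisionRecord:
    """One audit entry per automated decision; field names are illustrative."""
    decision_id: str
    model_version: str
    features: dict       # exact feature values the model saw
    score: float
    threshold: float
    decision: str
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # sort_keys gives a stable serialization, so the digest is reproducible.
        return json.dumps(asdict(self), sort_keys=True)

    def digest(self) -> str:
        # SHA-256 of the serialized record, usable for later tamper-evidence checks.
        return hashlib.sha256(self.to_json().encode("utf-8")).hexdigest()


record = DecisionRecord(
    decision_id="txn-0001",
    model_version="fraud-model:3.2.0",
    features={"merchant_risk": "high", "ip_country_matches_card": False},
    score=0.83,
    threshold=0.75,
    decision="flag",
)
print(record.to_json())
print(record.digest())
```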

Fairness Monitoring Dashboards: Real-time tracking of group-level fairness metrics to identify potential discrimination.

Autonomous Systems

Automotive AI demands explainability that connects algorithmic decisions to human understanding:

Critical Requirements:

- Clear attribution for vehicle behaviors (braking, lane changes, speed adjustments)

- Sensor-to-decision traceability for incident analysis

- Real-time decision logging for safety validation

Implementation Approaches:

End-to-End Traceability: Complete logs linking raw sensor data (lidar, camera, IMU) to specific control decisions with confidence scores and thresholds.

Visual Decision Overlays: Heatmaps and annotated footage showing which image regions influenced object detection or path planning decisions.

Simulation-Backed Analysis: Post-incident tools allowing engineers to modify environmental parameters and test alternative scenarios.
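The idea of replaying a logged scenario with altered parameters can be sketched with a toy decision rule. Here `Scenario`, `should_brake`, its confidence threshold, and its time-to-collision limit are hypothetical simplifications of a real planner; the point is the sweep, which shows exactly where the decision flips.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Scenario:
    ego_speed_mps: float         # vehicle speed, metres per second
    object_distance_m: float     # distance to the detected object, metres
    detection_confidence: float  # detector score for the object, 0..1


CONFIDENCE_THRESHOLD = 0.6  # hypothetical detector threshold
TTC_LIMIT_S = 2.0           # hypothetical time-to-collision limit, seconds


def should_brake(s: Scenario) -> bool:
    """Toy planner rule: brake for a confident detection with a short time to collision."""
    if s.detection_confidence < CONFIDENCE_THRESHOLD or s.ego_speed_mps <= 0:
        return False
    return s.object_distance_m / s.ego_speed_mps < TTC_LIMIT_S


def sweep(base: Scenario, param: str, values):
    """Replay a logged scenario with one parameter changed; return (value, decision) pairs."""
    return [(v, should_brake(replace(base, **{param: v}))) for v in values]


logged = Scenario(ego_speed_mps=15.0, object_distance_m=25.0, detection_confidence=0.72)
for value, brake in sweep(logged, "detection_confidence", [0.50, 0.55, 0.60, 0.65]):
    print(f"confidence={value:.2f} -> brake={brake}")
```

Here braking switches off once the detection confidence drops below 0.6, which tells an engineer how much margin the logged detection had.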

Integrity Systems

Content moderation and abuse detection systems require explanations that satisfy legal, policy, and operational review processes:

Core Needs:

- Clear rationale for enforcement actions

- Feature audits ensuring geographic or linguistic bias doesn't drive decisions

- Model cards documenting assumptions, limitations, and intended use cases
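A model card can start as a small structured record that is versioned alongside the model. The sketch below is a minimal illustration; the field selection loosely follows the model-card idea, and the example contents are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ModelCard:
    """Minimal model card kept in version control next to the model artifact."""
    name: str
    version: str
    intended_use: str
    out_of_scope: list = field(default_factory=list)
    assumptions: list = field(default_factory=list)
    limitations: list = field(default_factory=list)
    evaluated_slices: list = field(default_factory=list)  # e.g. languages/regions audited for bias

    def to_markdown(self) -> str:
        lines = [f"# {self.name} (v{self.version})", "", f"Intended use: {self.intended_use}"]
        sections = [
            ("Out of scope", self.out_of_scope),
            ("Assumptions", self.assumptions),
            ("Limitations", self.limitations),
            ("Evaluated slices", self.evaluated_slices),
        ]
        for title, items in sections:
            lines += ["", f"## {title}"] + [f"- {item}" for item in items]
        return "\n".join(lines)


card = ModelCard(
    name="abuse-report-classifier",
    version="1.4.0",
    intended_use="Prioritize reported posts for human review; not for automatic removal.",
    out_of_scope=["Enforcement without human review"],
    assumptions=["Input text is a single post, not a full thread"],
    limitations=["Not yet evaluated on code-switched text"],
    evaluated_slices=["language: en, es, pt", "region: NA, LATAM"],
)
print(card.to_markdown())
```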

What Does an Explainability Toolbox Include?

Practitioners draw on a toolbox of interpretability techniques that help explain how complex models make decisions. Here are some of the most common, with examples of how to apply them in real-world contexts:

LIME: Local Model Approximation

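LIME explains individual predictions by fitting a simple, interpretable surrogate model (typically a sparse linear model) that approximates the complex model's behavior in the neighborhood of the instance being explained.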

Financial Example: When explaining loan rejections, LIME might reveal that a high loan amount (-0.34 contribution) and short employment history (-0.22) were the primary factors, while a good credit score (+0.17) provided some positive influence. This granular breakdown enables clear customer communication and internal decision validation.
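The core of LIME can be sketched in a few lines of NumPy for continuous tabular features: perturb the instance, weight each perturbation by its proximity to the original, and fit a weighted linear surrogate. This simplified version skips the discretization and feature-selection steps that the reference `lime` implementation offers, and the kernel width and the toy model are assumptions.

```python
import numpy as np


def lime_explain(predict_fn, x, feature_scales, num_samples=5000, kernel_width=None, seed=0):
    """LIME-style local explanation for one instance with continuous features.

    predict_fn: maps an (n, d) array to n model outputs (e.g. approval probabilities).
    x: the instance to explain, shape (d,).
    feature_scales: typical spread of each feature (e.g. training std), shape (d,).
    Returns one coefficient per feature: the local effect of a one-scale-unit change.
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # assumed default; tune for your data
    # 1. Perturb the instance with Gaussian noise in standardized units.
    z = rng.normal(size=(num_samples, d))
    preds = predict_fn(x + z * feature_scales)
    # 2. Weight perturbations by proximity to x with an exponential kernel.
    weights = np.exp(-np.sum(z ** 2, axis=1) / kernel_width ** 2)
    # 3. Fit a weighted linear surrogate (with intercept) by weighted least squares.
    A = np.hstack([np.ones((num_samples, 1)), z])
    AtW = A.T * weights
    beta = np.linalg.solve(AtW @ A + 1e-8 * np.eye(d + 1), AtW @ preds)
    return beta[1:]  # drop the intercept


# Usage with a hypothetical toy approval model: loan amount (k), months employed, credit score.
def toy_model(X):
    logits = -0.02 * X[:, 0] + 0.05 * X[:, 1] + 0.01 * (X[:, 2] - 650)
    return 1.0 / (1.0 + np.exp(-logits))


applicant = np.array([150.0, 8.0, 690.0])
print(lime_explain(toy_model, applicant, feature_scales=np.array([50.0, 12.0, 60.0])))
```

Because the surrogate works in standardized units, each coefficient reads as the local effect of a one-scale change in that feature; when `predict_fn` is itself linear, the surrogate recovers the true weights times the scales.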

SHAP: Game-Theoretic Feature Attribution

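SHAP attributes a prediction to its input features using Shapley values from cooperative game theory. For any single prediction, the feature contributions sum to the difference between the model's output and a baseline (expected) output, which makes them straightforward to audit.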

Fraud Detection Application: For a transaction flagged with 83% fraud probability, SHAP might show that a high-risk merchant (+0.27), a foreign IP address (+0.19), and unusual timing (+0.14) contributed most to the decision, while a familiar device ID (-0.12) provided some reassurance. This breakdown supports both operational decisions and regulatory reporting.
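For intuition, Shapley values can be computed exactly by enumerating every coalition of features, as below. Absent features are replaced by a single baseline value, which is one of several ways to define a coalition's value; the `shap` library instead uses background datasets and faster estimators such as KernelSHAP and TreeSHAP. The toy `fraud_score` model and its signals are hypothetical.

```python
from itertools import combinations
from math import factorial


def exact_shapley(model, x, baseline):
    """Exact Shapley values for one prediction by enumerating every feature coalition.

    Features outside a coalition take their baseline value (a single-reference
    simplification). Cost grows as 2^d, so this only suits a handful of features.
    """
    d = len(x)

    def value(coalition):
        return model([x[i] if i in coalition else baseline[i] for i in range(d)])

    phi = [0.0] * d
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for size in range(d):
            weight = factorial(size) * factorial(d - size - 1) / factorial(d)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical fraud score over binary signals, with one interaction term.
FEATURES = ["high_risk_merchant", "foreign_ip", "unusual_hour", "known_device"]


def fraud_score(z):
    merchant, foreign_ip, unusual_hour, known_device = z
    return (0.10 + 0.30 * merchant + 0.20 * foreign_ip
            + 0.15 * merchant * unusual_hour - 0.10 * known_device)


x = [1, 1, 1, 1]
baseline = [0, 0, 0, 0]
for name, contribution in zip(FEATURES, exact_shapley(fraud_score, x, baseline)):
    print(f"{name}: {contribution:+.3f}")
```

This prints +0.375, +0.200, +0.075, and -0.100: the merchant/timing interaction is split evenly between those two features, and the attributions sum to `fraud_score(x) - fraud_score(baseline)`.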

Anchors: Rule-Based Explanations

Anchors generate if-then rules that "anchor" a prediction: as long as the rule's conditions hold, the model's prediction stays the same with high probability (the rule's precision).

AML (Anti-Money Laundering) Monitoring: An anchor might show that transactions over $100,000 from accounts less than 30 days old to offshore jurisdictions trigger review 98% of the time, giving compliance teams clear, actionable rules for prioritizing manual review.
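Anchors rest on two quantities: precision (how often the prediction stays the same when the rule holds) and coverage (how often the rule applies). The sketch below estimates both for a single candidate rule on a sample of real records; the full algorithm also searches over candidate rules and samples from a perturbation distribution. The record fields, the toy `review_model`, and the rule are hypothetical.

```python
def anchor_precision_coverage(rule, model, records, target):
    """Estimate precision and coverage of one candidate anchor on a sample of records.

    precision: share of rule-matching records for which the model still predicts `target`
    coverage:  share of all records that match the rule
    """
    matching = [r for r in records if rule(r)]
    if not matching:
        return 0.0, 0.0
    same = sum(1 for r in matching if model(r) == target)
    return same / len(matching), len(matching) / len(records)


# Hypothetical review model and candidate anchor for AML transaction monitoring.
def review_model(r):
    if r["amount"] > 100_000 and r["account_age_days"] < 30 and not r["allowlisted"]:
        return "review"
    return "clear"


def anchor(r):
    return r["amount"] > 100_000 and r["account_age_days"] < 30 and r["offshore"]


records = [
    {"amount": 150_000, "account_age_days": 10, "offshore": True, "allowlisted": False},
    {"amount": 200_000, "account_age_days": 5, "offshore": True, "allowlisted": True},
    {"amount": 120_000, "account_age_days": 20, "offshore": True, "allowlisted": False},
    {"amount": 120_000, "account_age_days": 200, "offshore": True, "allowlisted": False},
    {"amount": 50_000, "account_age_days": 10, "offshore": False, "allowlisted": False},
]
precision, coverage = anchor_precision_coverage(anchor, review_model, records, "review")
print(f"precision={precision:.2f}, coverage={coverage:.2f}")
```

On this tiny sample the rule has precision 0.67 and coverage 0.60; a rule worth handing to compliance teams needs high precision on a much larger sample.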

Visual Explainability: Grad-CAM and Saliency Methods

For computer vision applications, gradient-based methods highlight which image regions most influenced model decisions.

Autonomous Driving: When a pedestrian detection system triggers unexpected braking, Grad-CAM can reveal whether the model focused on genuine pedestrian features or on spurious cues such as shadows or signage. That distinction is critical for safety validation and model improvement.
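Once a framework has supplied a convolutional layer's activations and the gradients of the target class score with respect to them (for example, via forward and backward hooks in PyTorch), the Grad-CAM computation itself fits in a few lines. The arrays in the usage example are tiny hand-made stand-ins, not real network outputs.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM heatmap from one conv layer's activations and class-score gradients.

    activations, gradients: arrays of shape (K, H, W) for the K channels of the chosen
    layer, for a single image. Returns an (H, W) map scaled to [0, 1].
    """
    # Channel weights: global-average-pool the gradients over spatial positions.
    alphas = gradients.mean(axis=(1, 2))                              # shape (K,)
    # Weighted sum of the feature maps, then ReLU keeps only positive evidence.
    cam = np.maximum(np.tensordot(alphas, activations, axes=1), 0.0)  # shape (H, W)
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Tiny hand-made stand-ins for values a framework's hooks would capture.
activations = np.array([
    [[1.0, 0.0], [0.0, 0.0]],   # channel 0 fires in the top-left cell
    [[0.0, 0.0], [0.0, 1.0]],   # channel 1 fires in the bottom-right cell
])
gradients = np.stack([np.ones((2, 2)), -np.ones((2, 2))])  # channel 0 helps, channel 1 hurts
print(grad_cam(activations, gradients))
```

In practice the resulting `H x W` map is upsampled to the input resolution and overlaid on the camera frame as a heatmap.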

Fairness Metrics: Bias Detection and Mitigation

Systematic fairness evaluation helps detect and prevent discriminatory outcomes across protected groups.

Credit Approval Monitoring: Tracking metrics such as demographic parity (equal approval rates), equal opportunity (equal true positive rates among qualified applicants), and predictive parity (equal precision across groups) supports compliance with fair lending regulations and helps surface potential model biases.
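These three metrics can be computed per group from logged decisions and outcomes. The sketch below assumes binary labels (1 = creditworthy, 1 = approved) and uses hypothetical group labels and data; how large a gap counts as a problem is a policy decision, not something the code decides.

```python
import math
from collections import defaultdict


def group_fairness_report(y_true, y_pred, groups):
    """Per-group approval rate, true positive rate, and precision for binary decisions.

    y_true: 1 if the applicant was actually creditworthy (e.g. repaid), else 0
    y_pred: 1 if the model approved the applicant, else 0
    groups: group label for each applicant
    """
    counts = defaultdict(lambda: {"n": 0, "approved": 0, "qualified": 0, "tp": 0})
    for t, p, g in zip(y_true, y_pred, groups):
        c = counts[g]
        c["n"] += 1
        c["approved"] += p
        c["qualified"] += t
        c["tp"] += t * p
    nan = float("nan")
    return {
        g: {
            "approval_rate": c["approved"] / c["n"],                         # demographic parity
            "tpr": c["tp"] / c["qualified"] if c["qualified"] else nan,      # equal opportunity
            "precision": c["tp"] / c["approved"] if c["approved"] else nan,  # predictive parity
        }
        for g, c in counts.items()
    }


def max_gap(report, metric):
    """Largest difference in one metric across groups, ignoring undefined (NaN) entries."""
    values = [r[metric] for r in report.values() if not math.isnan(r[metric])]
    return max(values) - min(values)


# Hypothetical logged outcomes for two groups, A and B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 0, 1, 0, 1, 1, 1, 0]
groups = ["A"] * 4 + ["B"] * 4
report = group_fairness_report(y_true, y_pred, groups)
for metric in ("approval_rate", "tpr", "precision"):
    print(metric, round(max_gap(report, metric), 3))
```

Since precision equals TPR times the group's qualification rate divided by its approval rate, groups with different qualification rates cannot match on all three metrics at once, so teams usually decide which gaps to monitor and alert on.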

Cross-Functional Integration: Beyond Technical Implementation

Successful explainability requires coordination across disciplines:

Legal & Compliance: Need audit-ready documentation and clear decision rationale for regulatory defense.

Policy & Operations: Require explanations that map directly to policy violations and enforcement guidelines.

UX & Product: Must translate technical explanations into user-friendly communications that build rather than erode trust.

Engineering: Should embed explainability into system architecture from design through deployment, not as an afterthought.

It's Not Only About the Tools: Design for Interpretability

Explainability is not something you add retrospectively. It must be incorporated into the system from day one.

Best Practices:

- Prefer interpretable models, such as decision trees or generalized additive models (GAMs), where performance allows.

- Keep feature spaces easy for humans to comprehend.

- Include explanation generation in model-serving infrastructure.

- Document and version model behavior for traceability.
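As a minimal sketch of the first and third practices together, the additive scorecard below (GAM-style, with hand-set shape functions) returns its per-feature contributions and model version from the same serving call that produces the score. The features, bins, coefficients, and version string are hypothetical; in practice the shape functions would be learned from data.

```python
MODEL_VERSION = "credit-scorecard:1.0.0"  # hypothetical version tag
INTERCEPT = -1.0                           # hypothetical baseline score (log-odds scale)


def employment_term(months):
    # Piecewise-constant shape function; bin edges are illustrative.
    if months < 12:
        return -0.6
    if months < 36:
        return 0.0
    return 0.4


def utilization_term(ratio):
    # Penalty grows linearly once credit utilization exceeds 30%, capped at 100%.
    return -2.0 * max(0.0, min(ratio, 1.0) - 0.3)


SHAPE_FUNCTIONS = {
    "employment_months": employment_term,
    "credit_utilization": utilization_term,
}


def predict_with_explanation(applicant):
    """Serving entry point: the score and its exact per-feature breakdown come back together."""
    contributions = {name: fn(applicant[name]) for name, fn in SHAPE_FUNCTIONS.items()}
    score = INTERCEPT + sum(contributions.values())
    return {"model_version": MODEL_VERSION, "score": score, "contributions": contributions}


print(predict_with_explanation({"employment_months": 6, "credit_utilization": 0.8}))
```

Because the score is a sum of per-feature terms, the explanation is exact by construction rather than approximated after the fact.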

In high-risk environments, the most successful ML systems are not just accurate, but introspectable and accountable by design.

Final Thoughts

Explainability is often not a luxury but a requirement. Whether you are classifying transactions, managing vehicle perception systems, or enforcing platform integrity policies, being able to explain model behavior is essential for trust, safety, and regulatory compliance.

As engineers and leaders, we must design systems that can justify their decisions. We need to invest in the right tools and collaborate with cross-functional teams to build models whose logic is easy to explain.

References

- Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin. “Why Should I Trust You?” Explaining the Predictions of Any Classifier.

- Scott Lundberg, Su-In Lee (2017). “A Unified Approach to Interpreting Model Predictions.”

- Marco Tulio Ribeiro, Sameer Singh, Carlos Guestrin. “Anchors: High-Precision Model-Agnostic Explanations.”

- Alibi Explainability Toolkit: Anchors documentation.

- Selvaraju et al. (2017). “Grad-CAM: Visual Explanations from Deep Networks via Gradient-Based Localization.”

- PyTorch Grad-CAM implementation.

- Smilkov et al. (2017, Google Brain). “SmoothGrad: removing noise by adding noise.”

- Solon Barocas, Moritz Hardt, Arvind Narayanan. “Fairness and Machine Learning: Limitations and Opportunities.”